AI Video and Stochastic Pareidolia

Music for Generated Metronome (11 Variations)

This was an experiment, and I’m not sure it was a successful one. But it got me thinking anyway: about time, and video, and the way time works inside an AI-generated video as it is “uncompressed,” i.e., “generated.”

AI-generated video is AI-generated images, plus time. That is, the images move — they’re animated. But time for a generative video model runs differently than it does for the rest of us. Internally, time is frozen: the movement of objects in a video, broken down into training data, becomes fixed like the paths of raindrops on a window.

When the model unpacks that, it has a certain tempo, but it’s been unclear to me how the speed of the video is determined. There’s a certain translation of movement as it gets frozen into place: the trail in any given frame represents a segment of time; the next snapshot represents another segment, and so forth. If the trail runs across the entire frame, the model can unpack it over however long the frame was meant to last, and the thing will move slower than a trail that moves ever so slightly in the same frame.

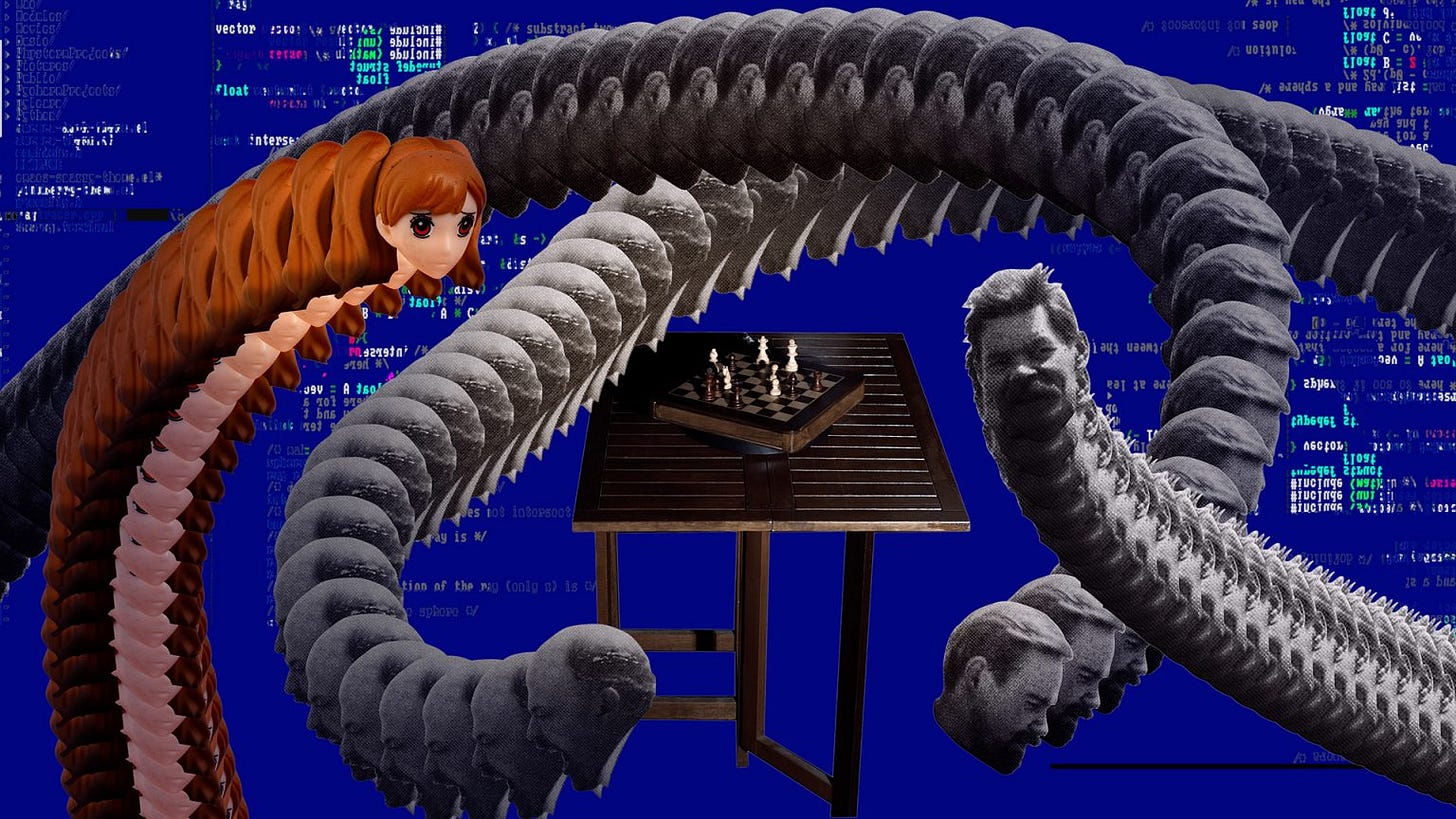

For example, above, you see a metronome with a needle in the middle, which is meant to sway back and forth to keep a steady tempo. Typically, the metronome clicks each time the needle hits the center. If we were to freeze a video of a metronome while training a video diffusion model (such as Sora or Gen 3), we might capture a moment in time that looks like what we see above. The needle is not moving, but we can see the trace of where it moved. That movement is frozen as a range in a single frame, suggesting that the needle moves from side to side within that specific area during the time span the frame represents.
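To make that frozen “range” concrete, here is a minimal sketch, assuming an idealized metronome whose needle angle follows a sine wave and makes one side-to-side swing per beat. The function names `needle_angle` and `frame_trace`, the 30-degree amplitude, the 60 BPM tempo, and the exposure times are all hypothetical illustrations, not anything taken from Sora, Gen 3, or their training data. The sketch samples the needle across one frame’s exposure and reports the span of angles it covers, which is the trace a single frame would freeze.

```python
import math


def needle_angle(t, amplitude_deg=30.0, bpm=60.0):
    """Angle of an idealized metronome needle (degrees) at time t (seconds).

    Assumption: the needle follows a sine wave and makes one side-to-side
    swing per beat, so a full left-right-left cycle spans two beats.
    """
    period_s = 2 * 60.0 / bpm
    return amplitude_deg * math.sin(2 * math.pi * t / period_s)


def frame_trace(frame_start, exposure, samples=1000, **kwargs):
    """Sample the needle across one frame's exposure window.

    Returns (min_angle, max_angle): the range of positions that a single
    frame would freeze as a blurred trace.
    """
    angles = [
        needle_angle(frame_start + exposure * i / (samples - 1), **kwargs)
        for i in range(samples)
    ]
    return min(angles), max(angles)


# A short exposure (1/48 s) freezes only a sliver of the swing...
print(frame_trace(0.0, 1 / 48))
# ...while a one-second exposure at 60 BPM freezes the whole sweep.
print(frame_trace(-0.5, 1.0))
```

With the short exposure, the trace is a sliver of about two degrees; with the one-second exposure, it covers the full sweep from one side to the other. Either way, the needle never appears outside the range the frame records.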

The needle would not appear beyond that range. But if you have thousands of styles of metronome in the training data, and a concept of “metronome” built from those thousands of examples, the needle will not move reliably over any specific span of time.

This explains the extremely strange behavior (at least in comparison to real-world physics) of the needle in a generated video of a metronome. The range of possible locations for the needle is compressed, but the behavior of that needle is lost because it moves too quickly: the model is making a blur of a blur. The blur becomes a site where the needle could spontaneously emerge at any moment, and so in the resulting video, we see exactly that. Pixels are drawn, and the model interprets the rapid swaying of the needle between two points as potentially two needles, then animates two needles in that space.

This piece takes that at face value. I scored the ticking this metronome would have produced for its tempo, matching clicks to its movement from side to side. That track was then loaded into Udio, a diffusion model for music. Udio offers a “remix” function, but it is poorly named because the original track can stay intact. Instead, it adds layers of sound on top of it or shifts the tonal qualities of the audio track. So, for example, if I have a clicking metronome track, I can convert it to synthesizer noises or a human vocalist.

In the video above, that’s precisely what I did. Using the strange time signature of the generated metronome, I built a manual click track with a few different tonalities of click. I uploaded that to Udio and generated a variety of tracks that altered the click track while maintaining its underlying structure.
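A manual click track along these lines can be sketched with nothing but Python’s standard library. This is a hypothetical reconstruction, not the process used for the piece: the click times would be read off the generated video by hand, and the different “tonalities” of click are approximated here as short, decaying sine bursts at different frequencies. The names `click`, `render_click_track`, `write_wav`, and `SAMPLE_RATE` are illustrative.

```python
import math
import struct
import wave

SAMPLE_RATE = 44100  # samples per second (assumed CD-quality mono)


def click(freq_hz, duration_s=0.02):
    """A short, exponentially decaying sine burst; freq_hz sets its tonality."""
    n = int(SAMPLE_RATE * duration_s)
    return [
        math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) * math.exp(-6 * i / n)
        for i in range(n)
    ]


def render_click_track(clicks, total_s):
    """Mix clicks into one track.

    clicks: list of (time_s, freq_hz) pairs, which need not fall on a
    regular grid. Returns float samples in [-1, 1].
    """
    out = [0.0] * int(SAMPLE_RATE * total_s)
    for t, freq in clicks:
        start = int(t * SAMPLE_RATE)
        for i, s in enumerate(click(freq)):
            if start + i < len(out):
                out[start + i] += s
    # Rescale only if overlapping clicks pushed the peak past full scale.
    peak = max((abs(x) for x in out), default=0.0)
    return [x / peak for x in out] if peak > 1.0 else out


def write_wav(path, samples):
    """Write float samples as a 16-bit mono WAV file."""
    with wave.open(path, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)
        w.setframerate(SAMPLE_RATE)
        w.writeframes(b"".join(struct.pack("<h", int(s * 32767)) for s in samples))
```

For example, `write_wav("click_track.wav", render_click_track([(0.0, 1000.0), (0.41, 1500.0), (0.97, 800.0)], 1.5))` renders three irregularly spaced clicks in three different pitches; the times and pitches there are placeholders, not the metronome’s actual timing.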

It’s important to note that a metronome is a way of keeping time. It isn’t a cue for a sound. Musicians who played a note on every click would sound highly repetitive. The metronome sets the tempo for playing the notes, which can occur anywhere within the beat.

The result is 11 audio tracks that respond to the metronome's tempo. They add other elements, too. Note that the video does not respond to the audio: the metronome sets the tempo around which the other sounds are generated. The tempo is so strange that Udio didn’t provide any kind of structure; nothing in here sounds like pop music.

To be clear, I also hope people find the futility of this exercise funny. I don’t know if I’d go so far as to call it “commentary” on relying on AI for tasks it wasn’t designed to do, but I think that’s a layer of it. Mostly I just wanted to think about time.

Stochastic Pareidolia

There’s an element of pareidolia in AI. Pareidolia is the tendency to perceive a specific, often meaningful image in a random or ambiguous visual pattern: the ability to make shapes or pictures out of random noise. An AI model does something similar when it “hallucinates”: it finds a pattern that isn’t there. Of course, this is precisely what image models do by design. They make mistakes, identifying patterns in noise that don’t actually occur.

In watching these videos, there’s a strange sense of the music coming in and out of alignment with the metronome, even though it isn’t supposed to align. The video isn’t responding to the music; the music is responding, awkwardly, to the video. The tempo track sets the tempo to which the other sounds are arranged. Not every click of the metronome has to coincide with a sound. But it is disorienting when the sounds miss the click and very satisfying when they land on it. This is a kind of sonification of the hunt for quality images when using AI: mostly misses, then a hit, and then we move on. Here I want every sound to land on time, on the beat, but the audio model doesn’t work that way. Music isn’t structured that way, either: not every beat lands on the click of the metronome.

I’m also mindful of my role in the process. One thing I did in this piece was to operate as a stenographer. The video is generated, and I respond to the visual of the metronome to facilitate a pattern-analysis / improv session by the Udio audio model. It’s a complete inversion of what machines are supposed to do for artists. Of course, this is intentional — the thing I am doing is weird — but it still left me with a natural feeling of “why did I stay up until 3 am doing this?” afterward. The machine led, and I followed.

I’ve been revisiting and updating my Critical AI Images course from 2023 (you can now get texts that replicate and expand on the video lectures) and thinking about the history of generativity, and why generative art and the stripping away of human bias were so intriguing to artists of the genre. I consider myself one of them, but AI feels different, and it has left me questioning what was so interesting to me about generative systems before AI.

It came down to building systems: environments in which sounds emerged. The work of art (work as a verb, as opposed to the noun in artwork) was setting up the system.

Still, the experiment did help me understand and think about the way time is processed by AI video: every frame is compressed into a tick of some clock hand. I don’t know how long a second lasts once it’s in the data, whether the needle of a metronome could ever be rendered precisely, or whose time it would be keeping. What tempo might it be?

Anyway, this was a weird experiment, and I am not sure about the results. But I thought it helped me understand some issues around AI, time, video and sound.

This publication is free to read and share thanks to many hours of work from the author (me). If you’d like to keep this work alive, you can subscribe, share a link to your favorite posts, or upgrade to a paid subscription. Thanks!

Things I Am Up To This Month

October 18: Fantastic Futures Conference, Canberra, Australia

I’ll be attending the Fantastic Futures conference in person in Canberra, Australia! On October 18, as part of the conference, I’m in conversation with Kartini Ludwig, the Director and Founder of Kopi Su, a digital design and innovation studio in Sydney, and Megan Loader, NFSA Chief Curator. I’ll follow up with some time in Melbourne and a potential speaking event, with details to be confirmed. Fantastic Futures is sold out, but check it out anyway.

Oct. 26: Uppsala Short Film Festival Screening, Uppsala, Sweden

ALGORITHMIC GROTESQUE: Unravelling AI is a program for the Uppsala Short Film Festival focused on “filmmakers with a critical eye toward our algorithmic society.” It’s a great collection curated by Steph Maj Swanson, a.k.a. Supercomposite, with films by Eryk Salvaggio, Marion Balac, Ada Ada Ada, Ines Sieulle, Conner O’Malley & Dan Streit, and Ryan Worsley & Negativland.

In-Person Lecture and Workshop:

14 Thesis on Gaussian Pop

Royal Melbourne Institute of Technology (RMIT)

Wednesday, October 23rd 12pm-3pm (Melbourne)

I’ll be discussing my 14 Thesis on Gaussian Pop and the state of AI-generated music in conjunction with the RMIT / ARC Centre of Excellence for Automated Decision-Making and Society (ADM+S) exhibition “This Hideous Replica.” The session will combine a workshop with a lecture on the cultural and technical context of noise in, and the creative misuse of, commercial AI-generated music systems.

Details are to be announced. E-mail me directly if you’re especially eager to attend!

ASC Speaker Series:

AI-Generated Art / Steering Through Noisy Channels

Online Live Stream with Audience Discussion:

Saturday, October 27 12-1:30 EDT

AI-produced images, now among the search results for famous artworks and historical events, introduce noise into the communication network of the Internet. What is a cybernetic understanding of generative artificial intelligence systems, such as diffusion models? How might cybernetics introduce a more appropriate set of metaphors than those proposed by Silicon Valley’s vision of “what humans do”?

Eryk Salvaggio and Mark Sullivan — two speakers versed in cybernetics and AI image generation systems — join ASC president Paul Pangaro to contrast the often inflexible “AI” view of the human, limited by pattern finding and constraint, with the cybernetic view of discovering and even inventing a relationship with the world. What is the potential of cybernetics in grappling with this “noise in the channel” of AI generated media?

This is a free online event with the American Society for Cybernetics.

Through Nov. 24: Exhibition: Poetics of Prompting, Eindhoven!

Poetics of Prompting brings together 21 artists and designers curated by The Hmm collective to explore the languages of the prompt from different perspectives and experiment in multiple ways with AI.

The exhibition features work by Morehshin Allahyari, Shumon Basar & Y7, Ren Loren Britton, Sarah Ciston, Mariana Fernández Mora, Radical Data, Yacht, Kira Xonorika, Kyle McDonald & Lauren McCarthy, Metahaven, Simone C Niquille, Sebastian Pardo & Riel Roch-Decter, Katarina Petrovic, Eryk Salvaggio, Sebastian Schmieg, Sasha Stiles, Paul Trillo, Richard Vijgen, Alan Warburton, and The Hmm & AIxDesign.

Through 2025: Exhibition

UMWELT, FMAV - Palazzo Santa Margherita

Curated by Marco Mancuso, the group exhibition UMWELT highlights how art and the artefacts of technoscience bring us closer to a deeper understanding of non-human expressions of intelligence, so that we can relate to them, make them part of a new collective environment and spread a renewed ecological ethic. In other words, it underlines how the anti-disciplinary relationship with the fields of design and philosophy sparks new kinds of relationship between human being and context, natural and artificial.

The artists who worked alongside the curator for their works to inhabit the Palazzo Santa Margherita exhibition spaces are: Forensic Architecture (The Nebelivka Hypothesis), Semiconductor (Through the AEgIS), James Bridle (Solar Panels (Radiolaria Series)), CROSSLUCID (The Way of Flowers), Anna Ridler (The Synthetic Iris Dataset), Entangled Others (Decohering Delineation), Robertina Šebjanič/Sofia Crespo/Feileacan McCormick (AquA(l)formings-Interweaving the Subaqueous) and Eryk Salvaggio (The Salt and the Women).

Are you a subscriber to Cybernetic Forests? Are you still reading for free? Why not consider upgrading your subscription to paid — to support independent artists and creative forms of research into AI?