Catastrophic Interference

Finding a poetry of paradigm change in a particular kind of machine learning failure.

Weights drive a neural network. When the system finds one piece of information linked with another again and again, it assigns that relationship a numerical value: a weight. When it sees no connection, it assigns none. The weight is how heavily the relationship is "weighed." The system reinforces these relationships when it finds new material that supports them. Over time, we say the system has "learned" them.

But something interesting can happen when new information enters the system. The new associations can become so strong that they seem to readjust all previous weights — or abandon them completely. In machine learning, it's called "catastrophic forgetting." A model discovers one new relationship, and it's all it can see. There is another term for this, "catastrophic interference." That one moves the focus from the model (which forgets) to the data (which interferes).
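To make that concrete, here is a minimal sketch of the effect in plain Python. Everything in it is an assumption for illustration: a one-weight model, two toy tasks deliberately built to conflict (task A says "positive numbers are class 1," task B says the opposite), and hypothetical helper names such as `make_task` and `train`. Real networks and real data are far messier, but the pattern is the same: train on A, then train only on B, and check what is left of A.

```python
import math
import random


def make_task(rng, n, flip):
    """Toy data: one input x in [-1, 1]; label is 1 when x > 0 (reversed if flip)."""
    data = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        label = 1 if (x > 0) != flip else 0
        data.append((x, label))
    return data


def predict(weights, x):
    """Logistic model: squash (weight * x + bias) into a probability of class 1."""
    w, b = weights
    return 1.0 / (1.0 + math.exp(-(w * x + b)))


def train(weights, data, epochs=50, lr=0.5):
    """Plain stochastic gradient descent on the log loss, one example at a time."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            error = predict((w, b), x) - y
            w -= lr * error * x
            b -= lr * error
    return (w, b)


def accuracy(weights, data):
    """Fraction of examples where the thresholded prediction matches the label."""
    correct = sum(1 for x, y in data if (predict(weights, x) > 0.5) == (y == 1))
    return correct / len(data)


rng = random.Random(0)  # fixed seed so the run is repeatable
task_a = make_task(rng, 200, flip=False)
task_b = make_task(rng, 200, flip=True)

weights = train((0.0, 0.0), task_a)       # learn task A first
acc_a_before = accuracy(weights, task_a)

weights = train(weights, task_b)          # then see only task B
acc_a_after = accuracy(weights, task_a)   # what survives of task A?
acc_b_after = accuracy(weights, task_b)

print("task A accuracy after learning A:", acc_a_before)
print("task A accuracy after learning B:", acc_a_after)
print("task B accuracy after learning B:", acc_b_after)
```

Nothing in the second round of training tells the model to discard task A. It simply follows the new data, and the old weight is overwritten along the way.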

Catastrophic interference is not adversarial. It is the simple product of information existing outside the model's previous framework. It is not even "weird data," per se. It's only weird to the system, and only because of how the system responds to it.

Researchers have a hard time explaining why this happens. It’s as if we forgot how to ride a bike when we learned how to swim. But thinking of catastrophic forgetting as memory loss isn’t the most intuitive metaphor for me. The better fit, I think, is Thomas Kuhn's cycle of scientific revolutions.

In the Kuhn cycle, "normal" science can accommodate a few new, unexplainable phenomena within the existing “paradigm” of a scientific theory. New forms of life cause us to re-evaluate what we know about biology. But over time, as anomalies stack up, Kuhn says the field enters a "crisis period." If this crisis is not resolved within the existing paradigm, the paradigm shifts, and a new scientific paradigm emerges.

So maybe the field of machine learning needs a catastrophic forgetting of its own.

We have built AI from intelligence models that have origins in exclusion, foundations in racism and eugenics. Natasha Stovall writes this week that these ideas of "intelligence" that we model with AI favor "discrete capacities to sort, categorize, process, and remember verbal and visual information as accurately and quickly as possible." This definition of intelligence, which she shows has emerged from racist pseudo-science, has been discredited and discarded by other fields. So why does it persist in AI research?

One reason is that this definition of intelligence has built technically successful systems. It has also built a dense network of academic literature. To lose this would be a "catastrophic forgetting," a crisis demanding a re-evaluation of previous associations. Those outside of the accepted labels, or advocating for new ideas, might be understood as "catastrophic interference."

Good.

The field of AI has followed a single form of intelligence to create a single form of intelligence. We should be asking how to complicate that. AI research must adapt or face a wall of irrelevance. This pressure is essential. The alternative may well be an AI built on the "catastrophic memory" of eugenics, racism, sexism, and neurotypical imaginings for understanding the world. And such a system will inevitably perpetuate those logics in its repetition of actions built on the data describing that vision of the world.

So let the machines forget them. We have the information to reframe the foundations of what we know to meet what we are learning. There is no reason to build flawed applications on outdated knowledge. Instead, let's welcome and embrace the "interference" of a broader imagination.

It is an opportunity to push the limits of what and how we know. We will never have working AI without it.

Things I’m Doing This Week

This week I’ve taken an online course on cassette tape hacking offered by Dogbotic, a Bay Area-based audio laboratory where “creative-driven inquiry meets inquiry-driven creativity.” This is not a paid ad, and they have no idea I am writing this, but I highly recommend it. In the first two hours I’ve learned more about the relationships between electronics, electricity and sound than anywhere else (and I have 18 hours left).

Things I’m Reading This Week

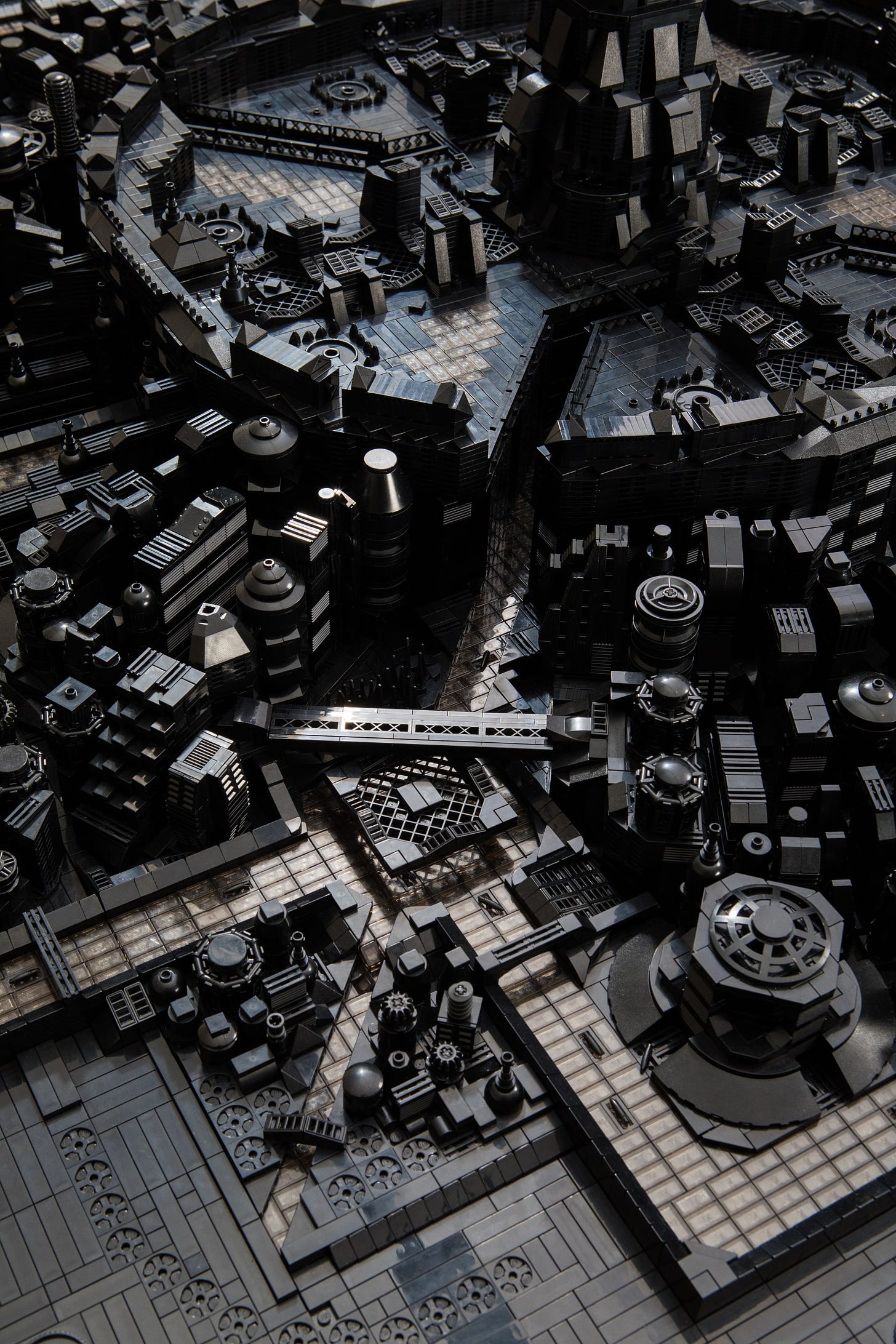

Building Black: Civilizations

Ekow Nimako

Artist Ekow Nimako recreates historical cities of Africa in Lego, moving them from the past and placing them into an imaginary science fiction future.

Constructed with 100,000 black Lego pieces, the 30-square-foot sculpture renders the lost trade capital into a futuristic, bustling metropolis with detailed references to the Islamic influences that shaped its architecture and history.

Towards a Digital Workerism: Workers’ Inquiry, Methods, and Technologies

Jamie Woodcock (h/t to Matteo Pasquinelli).

An academic article tracking the adoption of specific automation strategies. Woodcock argues that when two equally acceptable methods of automation are on offer, history has tended to choose those which maximize the control of management rather than emancipate workers. However, competition between strategies offers windows of opportunity for workers to use one tool against another. Woodcock looks at WhatsApp and Deliveroo as a case study for these tactics.

New technology can form part of ‘an electronic fabric of struggle’, as well as provide new ways to monitor and suppress. The key is understanding how workplace conflict has been changed by digitalization, and in turn what new forms of resistance and organization—in addition to traditional methods—will emerge in the political re-composition of the working class.

Laruelle: Against the Digital

Alex Galloway

Going niche here, but François Laruelle is a French philosopher who proposed that there is a “non-philosophy,” an alternative way of thinking that cannot be philosophized and comes from the refusal to philosophize. I’m working my way through this one, but I’m struck by his thesis: that there is a choice to engage in a reflective model of thought, rather than to stay immersed in simple being. If we take the questions plaguing us back to that question, how might we find different responses and positions? Galloway’s book interrogates this as a subject of digital logics. While pretty niche to digital philosophy, it’s interesting to me as a grounding position on the power of refusal, withdrawal, and going beyond false dichotomies of 0’s and 1’s.

The Kicker

I read a letter to the editor from a 1982 edition of COMPUTE! magazine. In it, the author mentioned his grandmother, Mary Ellen Bute, who had dismissed a computer artist featured in the previous issue as “uninteresting.” Mary Ellen Bute, it turns out, was creating the first electronic music videos, this one as early as 1938, using oscilloscopes and traditional animation techniques.

Thanks for reading! I’d love to hear comments or feedback. As always, feel free to share this post with others if you’re so moved. Or, if you’re finding me online, subscribe! I send one post weekly.