Seeing Like a Dataset: Notes on AI Photography

When You Focus Twice as Hard on the Forest, You Get Twice as Much Forest

Photography has developed a certain set of rules, and a photographer’s eye tends to follow those rules when scouring the landscape for images. Through practice, the rules become instincts or habits. The camera, meant to capture what we see, changes how we see.

Mushrooms, too, have changed the way I see the forest. My birdwatching forays have been overwhelmed by a foraging instinct, shifting my attention toward the soil instead of the skyline. That’s when I recalled another way of seeing.

As an AI artist, I often take my own photographs of natural patterns found on walks along beaches or forest trails. These are for building a dataset of that outing, training a model, and then generating an extended, simulated wandering. I take a few hundred of these photographs at a time, because that’s what you need to train a generative adversarial network (GAN). In turn, these GANs will make a study of the pixelized arrangements of those natural patterns, assign them coordinates and weights, and then reconstruct these clusters and patterns into new, unseen compositions.

As a result of this practice, my vision as a photographer has shifted. The rules of photographic composition are pointless to an AI eye. Just as the camera used to shape how and what I saw, the AI — and what the AI needs — shapes it, too.

One learns to think like a dataset. You do not compose one image, you compose 500, ideally 5,000. With too much variation in your data, the patterns won’t make sense. The results will be blurred and abstracted. Too little variation, and you overfit the model: lots of copies of the same thing.

So, you seek continuities of patterns between each shot, with variations in composition. You want to balance similar proportions of the elements within the frame. You aim to balance the splashes of apple-red maple leaves, patches of grass, and bursts of purple wildflowers, without introducing particulars.

This is an inversion of the photographer’s instincts, as well as the mushroom forager’s instinct. A photographer, and a mushroom hunter, will typically look for breaks in patterns. If I stumble across a mushroom, the instinct might be to capture it, on film or in a wicker basket. By contrast, the AI photographer looks away. The mushroom disrupts the patterns of the soil: it is an outlier in need of removal. The AI photographer wants the mud, grass, and leaves. We want clusters and patterns. We don’t focus on one image, we focus on the patterns across a sequence of images.

We want to give the system something predictable.

Extending the Forest

Prediction, whether it’s meant to generate photographs or inform a policy decision, is a matter of time and scale. Take enough photographs of mushrooms and one can start generating images of mushrooms. Cherry-pick enough data points and you can find patterns to support any conclusion. The underlying principle is the same: the AI photographer looks away from the unique subjects of the world, declares them off-limits, and looks instead to the patterns surrounding eruptions of variation.

This tells us something about the images we see an AI make. On the one hand, we might view it as flattening the world. On the other hand, it heightens my awareness of the subtleties of the dull. The singular is beautiful: birds, mushrooms, the person I love. Yet, the world behind them, the world we lose to our cognitive frames, is compelling in its own way.

Much of this background world is lost to us through what psychologists call schematic processing. We acknowledge that the soil is muddy and covered in leaves, and so we do not need to individually process every fallen leaf. Arrive at something novel in your environment, however, and you pause: what bird is that? Is that a mushroom rising from that log? What kind?

The benefit of schemas is also the problem with schemas. The world gets lost, until we consciously reactivate our attention. (Allen Ginsberg: “if you pay twice as much attention to your rug, you’ve got twice as much rug.”) An AI photographer is looking for schemas, looking for the noise we don’t hear, the colors we don’t see, the patterns that demand our inattention.

The Model Mind

AI pioneer Marvin Minsky used schemas to organize computational processes. Whatever the machine sensed could be placed into the category of ignorable or interruptive:

“When one encounters a new situation (or makes a substantial change to one's view of a problem), one selects from memory a structure called a frame. This is a remembered framework to be adapted to fit reality by changing details as necessary. A frame is a data-structure for representing a stereotyped situation like being in a certain kind of living room or going to a child's birthday party. Attached to each frame are several kinds of information. Some of this information is about how to use the frame. Some is about what one can expect to happen next. Some is about what to do if these expectations are not confirmed.” (Source)

Minsky took a metaphor meant to describe how our brains work, and then codified it into a computational system. The brain, in fact, does not “do” all of this. Rather, schemas are a shorthand devised to represent whatever our brains are actually doing.

Brains are not machines and do not work the way machines work. There is no file system, no data storage, no code to sort and categorize input. Rather, we’ve developed stories for making sense of how brains work, and one of those stories is the metaphor of schemas. The schema metaphor inspired the way we built machines, and somewhere we have lost track of what inspired what.

Today, when we see machines behave in ways that align with stories of how human brains “work,” we think: “oh yes, it’s just like a brain!” But it is not behaving like a brain. It is behaving like one story of a brain, particularly the story of a brain that was adapted into circuitry and computational logic. The machine brain behaves that way because someone explained human brains that way to Marvin Minsky, and Marvin Minsky built computers to match it.

As an indirect result of brain-metaphors being applied as instruction manuals for building complex neural networks, GANs behave in ways that align with and reflect these human schemas. Schemas are not always accurate, and information that works against existing schemas is often distorted to fit. We may not “see” a mushroom when we expect only to see leaves and weeds.

Humans often distort reality to fit into preconceived notions. Likewise, GANs will create distorted images in the presence of new information: if one mushroom exists within 500 photographs, its traces may appear in generated images, but they will be incomplete, warped to reconcile with whatever data is more abundant.

A careful photographer can learn to play with these biases: the art of picking cherries. The dataset can be skewed, and mushrooms may weave their way in. We may start calculating how many mushrooms we need to ensure they are legible to the algorithms but remain ambiguous. A practiced AI photographer can steer the biases of these models in idiosyncratic ways.

The AI-Human Eye

AI photography is about series, permutation, and redundancy. It is designed to create predictable outputs from predictable inputs. It exists because digital technology has made digital images an abundant resource. It is simple to take 5,000 images, and ironic that this abundance is a precondition for creating 50,000 more.

As a result, the “value” of AI photography is low.

GAN photography is the practice of going into the world with a camera, collecting 500 to 5,000 images for a dataset, cropping those images, creating variations (reversing, rotating, etc.) and training for a few thousand epochs to create even more extensions of those 500 to 5,000 images.
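The cropping and variation step described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the pipeline used for this work: nested lists of numbers stand in for real image data, the function names are hypothetical, and an actual workflow would use an image library. It also shows the arithmetic of abundance: flips combined with quarter-turns give up to eight variants per photograph, so 500 photographs can become 4,000 training images.

```python
from typing import List

# Assumption: an "image" here is just rows of pixel values,
# a stand-in for a real image array loaded from disk.
Image = List[List[int]]


def center_crop_square(img: Image) -> Image:
    """Crop the largest centered square out of a rectangular image."""
    height, width = len(img), len(img[0])
    side = min(height, width)
    top = (height - side) // 2
    left = (width - side) // 2
    return [row[left:left + side] for row in img[top:top + side]]


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right ("reversing")."""
    return [row[::-1] for row in img]


def rotate_90(img: Image) -> Image:
    """Rotate the image a quarter-turn clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def augment(img: Image) -> List[Image]:
    """Return 8 variants: the square crop and its mirror, each at 4 rotations."""
    square = center_crop_square(img)
    variants = []
    for base in (square, flip_horizontal(square)):
        current = base
        for _ in range(4):
            variants.append(current)
            current = rotate_90(current)
    return variants


def augmented_size(n_photos: int, variants_per_photo: int = 8) -> int:
    """How many training images a walk's worth of photographs becomes."""
    return n_photos * variants_per_photo


if __name__ == "__main__":
    photo = [[1, 2, 3],
             [4, 5, 6]]  # a tiny 2x3 "photograph"
    print(len(augment(photo)), "variants per photo")
    print(augmented_size(500), "images from 500 photos")
```

Whether all eight variants are appropriate depends on the subject. A forest floor shot from above has no obvious "up," so rotations cost nothing; a horizon line would not survive them.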

It all sounds wildly old-fashioned, yet the technology was cutting edge just three years ago. Compared to diffusion models, it sounds as archaic as developing our own film.

The images you see in this post were not generated by GANs at all. Rather, images I collected for a GAN were uploaded to DALLE2 (a diffusion-based model) and extended through outpainting. The center of the image is real, the edges are not. I simply type “image of the forest floor” to produce as many as I would want to see.

It will be interesting to see if GANs become obsolete, overtaken by readymade, pre-trained models like DALLE2 that generate images from simple words.

But I hope not. Archaic and useless as it is, GAN photography is personal, like journaling or most poetry. The process is likely much more captivating for the artist than the eventual result is to any audience. GAN photography is a strangely contemplative and reflective practice.

It reminds me of Zen meditation techniques where we consistently redirect our attention to the air flowing in through the tip of our nose. The GAN photographer is constantly returning their attention to the details we are designed to drift away from, the sights we have learned not to see. The particular is often beautiful, but it’s not the only form of beauty. In search of one mushroom, we might neglect a hundred thousand maple leaves.

Beyond the Frame

There is something else at work, though. Beneath the images produced by GANs is a convergence of information and calculation, reduction and exclusion, that flattens the world. It’s one thing to produce images that acknowledge the ignorable. It’s another thing to live in a world where these patterns are enforced.

Beyond the photograph frame, this world would be wearisome and unimaginative. It’s a world crafted to reduce difference, to ignore exceptions, outliers, and novelty. It is bleak, if not dangerous: a world of uniformity, a world without diversity, a world of only observable and repeatable patterns.

The AI photographer develops a curious vision, steered by these technologies: a way of seeing that is aligned with the information flows that curate our lives. The GAN photographer learns to see like a dataset, to internalize its rules.

Through practice, the rules become instincts or habits. The data, meant to capture what we see, changes how we see.

The challenge for the GAN photographer is to put the camera aside. We have to focus between the abstraction of mental schemas and the observation of concrete details. We can scan the leaves for patterns and for the bright red cap of an Amanita muscaria, against all odds.

Things I’m Doing This Week

November 9: Events!

I will be participating in two back-to-back public conversations on November 9: one on AI, creativity and imagination, and the other focused on mushrooms and designing for the nonhuman.

At 6pm CET (12pm in New York) I’ll be part of an online Twitter Spaces discussion with Space10 to discuss AI. You can set a reminder or listen in here.

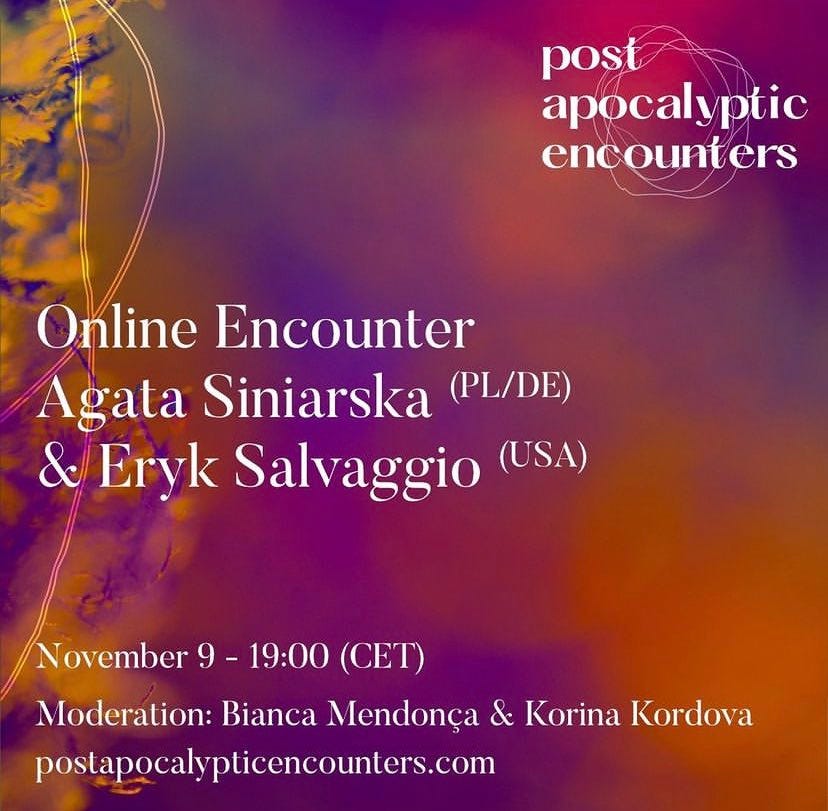

At 7pm CET (1pm in New York) I’ll be speaking with Agata Siniarska, of the Museum of the Anthropocene, for a recording of Post Apocalyptic Encounters. You can find details and stream that Zoom event here.

Finally, in Calgary that evening, look for some mushroom music to be featured on Ears Have Eyes, an experimental radio show and podcast out of radio station CJSW. Details here.

Busy Wednesday!

Thanks for reading the newsletter and, as always, please feel free to share, subscribe, like or comment so I know you’re enjoying what you get. If you’re keen to follow me on Twitter you can find me here, or join me (CyberneticForests@mastodon.social) on Mastodon. Thanks!