This newsletter is no longer run on Substack. If you’d like to subscribe, please find the signup form on my own website, here:

You can find this specific post here.

This is a transcript from a seminar delivered on October 23, 2024, at the Royal Melbourne Institute of Technology (RMIT) as part of a series of dialogues around This Hideous Replica, at RMIT Gallery. It was presented in association with ADM+S, Music Industry Research Collective, and Design and Sonic Practice. Thanks to Joel Stern for the invitation!

TODAY!

ASC Speaker Series

AI-Generated Art: Steering Through Noisy Channels

Online Live Stream with Audience Discussion:

Sunday, October 27, 12-1:30 EDT

AI-produced images are now amongst the search results for famous artworks and historical events, introducing noise into the communication network of the Internet. What is a cybernetic understanding of generative artificial intelligence systems, such as diffusion models? How might cybernetics introduce a more appropriate set of metaphors than those proposed by Silicon Valley’s vision of “what humans do?”

Eryk Salvaggio and Mark Sullivan, two speakers versed in cybernetics and AI image generation systems, join ASC president Paul Pangaro to contrast the often inflexible “AI” view of the human, limited by pattern finding and constraint, with the cybernetic view of discovering and even inventing a relationship with the world. What is the potential of cybernetics in grappling with this “noise in the channel” of AI-generated media?

This is a free online event with the American Society for Cybernetics.

November 1-3: Light Matter Film Festival, Alfred, NY

I’ll be in attendance for the Light Matter Film Festival for the North American screen premiere of Moth Glitch. Light Matter is “the world's first (?) international co-production dedicated to experimental film, video, and media art.” More info at:

November 1:

Watershed Pervasive Media Studio, Bristol, UK

This is an in-person and online event, but I’ll be joining remotely to speak on the role of noise in generative AI systems. Noise is required to make these systems work, but too much noise can make them unsteady. I’ll discuss how I work with the technical and cultural noise of AI to critique the assumptions about "humanity" that have informed the way these systems operate. It should be a nice event for discussion, with an emphasis on conversation and questions.

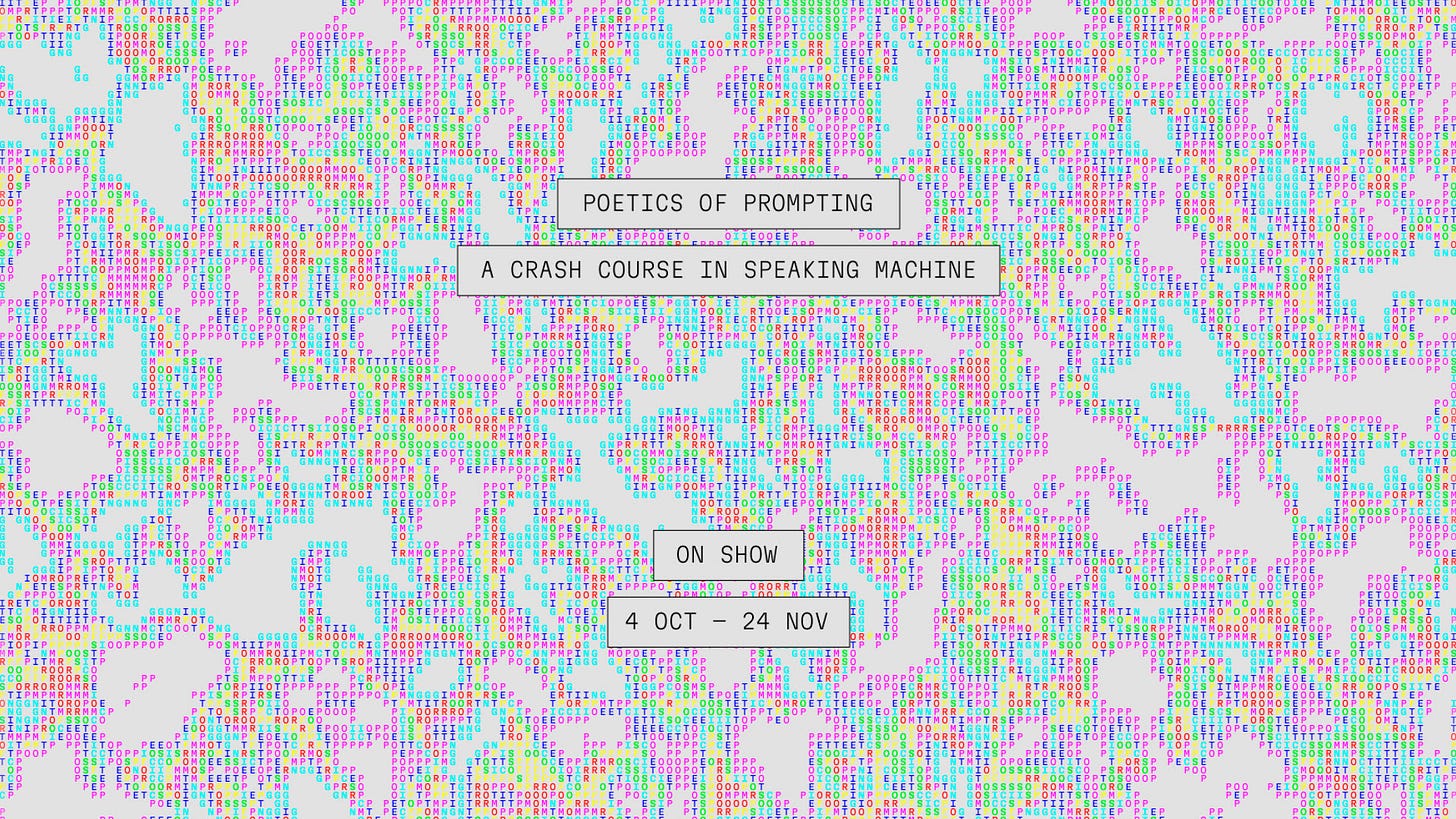

Through Nov. 24: Exhibition: Poetics of Prompting, Eindhoven!

Poetics of Prompting brings together 21 artists and designers curated by The Hmm collective to explore the languages of the prompt from different perspectives and experiment in multiple ways with AI.

The exhibition features work by Morehshin Allahyari, Shumon Basar & Y7, Ren Loren Britton, Sarah Ciston, Mariana Fernández Mora, Radical Data, Yacht, Kira Xonorika, Kyle McDonald & Lauren McCarthy, Metahaven, Simone C Niquille, Sebastian Pardo & Riel Roch-Decter, Katarina Petrovic, Eryk Salvaggio, Sebastian Schmieg, Sasha Stiles, Paul Trillo, Richard Vijgen, Alan Warburton, and The Hmm & AIxDesign.

Through 2025: Exhibition

UMWELT, FMAV - Palazzo Santa Margherita

Curated by Marco Mancuso, the group exhibition UMWELT highlights how art and the artefacts of technoscience bring us closer to a deeper understanding of non-human expressions of intelligence, so that we can relate to them, make them part of a new collective environment and spread a renewed ecological ethic. In other words, it underlines how the anti-disciplinary relationship with the fields of design and philosophy sparks new kinds of relationship between human being and context, natural and artificial.

The artists who worked alongside the curator for their works to inhabit the Palazzo Santa Margherita exhibition spaces are: Forensic Architecture (The Nebelivka Hypothesis), Semiconductor (Through the AEgIS), James Bridle (Solar Panels (Radiolaria Series)), CROSSLUCID (The Way of Flowers), Anna Ridler (The Synthetic Iris Dataset), Entangled Others (Decohering Delineation), Robertina Šebjanič/Sofia Crespo/Feileacan McCormick (AquA(l)formings-Interweaving the Subaqueous) and Eryk Salvaggio (The Salt and the Women).

If you’re a regular reader of Cybernetic Forests, thank you!

You can help support the newsletter by upgrading to a paid subscription. If you’re already a subscriber, extra thanks! You can also help spread the word by sharing posts online with folks you think would be interested.