The Pace of Change Depends on Your Gaze

Thinking about AI through scales of time instead of speed

One of my favorite things about living in Japan was the Shinkansen bullet train. From Fukuoka to Kitakyushu was a two-hour train ride on regular rail, but 20 minutes on the bullet train out of Hakata Station. If I was short on time but flush with cash, the bullet train was a justified luxury. Otherwise, slow-rail it was.

Although the two trains ran on parallel tracks, the scenery changed with their pace. At high speeds, that which was closest to us was invisible, passing as a blur. I’d take photos with my 2010 digital camera and the images would be distorted, the scenery traveling too fast for the sensor to catch. On the slower train, you saw what you were missing: mostly, powerlines and lamp posts, the boring stuff of infrastructure. Focus a bit further out and your eyes don’t race. The mountains loiter while the fence disappears.

This image came to mind while thinking about the human sense of scale and its association with time. In a blitz of technological progress, it’s tempting to try to see the lamp posts as they speed by. Try to catch it, though, and it’s inevitably distorted: moving faster than the human eye, or 2010-era digital camera, can catch it. Every day some influencer is trying to blow your mind with whatever they’re posing as the next game-changer. Doomerism, and hype, collide with the incentive to tell you: everything is moving fast, don’t be left behind. You’re left chasing after a bullet train.

My contrarian take on “things are moving so fast in the AI space” is that this speed is determined by where we cast our eyes on the landscape. The details blur by, but the structures outside the cabin stay the same, and so does the destination. Perspective can help us slow down and see what it is that we can work with, as opposed to what passes so fast we can’t see it.

Consider the panic and euphoria that emerged around Sora, OpenAI’s video generation model. Sora was, fundamentally, a diffusion model — the same things we’ve seen since DALL-E 2 — combined with tokens that could track motion, a variation on a technique used in Large Language Models. Slight variations on an LLM, or tweaks on a diffusion model, don’t change the fundamentals of these systems. The structures of these machines have been in place since the 1940s, at least in theory. They’re slightly different today, but in the past 20 years, it’s the availability of data that has changed, not major breakthroughs in architecture.

There are limits to that infrastructure, though some argue they will be transcended if we pile up enough data. But data collection can’t change the structure of these systems. They are not embodied, not capable of reason.

Of course, there is also real risk in complacency. But I think it is more risky to become so overwhelmed by speeding lamp posts from the train that you miss your stop. Meaningful changes require time. Every “new thing” quickly becomes too much, but we can and should slow down our attention to the never-ending spectacle and focus on the longer arcs. Strategies for challenging tech encroachment are harder when you’re disoriented.

These trajectories allow us space to anticipate the use of technology without being surprised or disoriented, and to advocate for steering those arcs. What is the trajectory of technology companies — what do they push forward or off track? We can look at recent and even not-so-recent history. From this vantage point, every increase in training efficiency and processing power is part of a consistent pattern, not a derailment. When we’re anxious we grab on to every new piece of information we can find. That just amplifies the anxiety, as we end up focused on what’s coming instead of thinking about what is present.

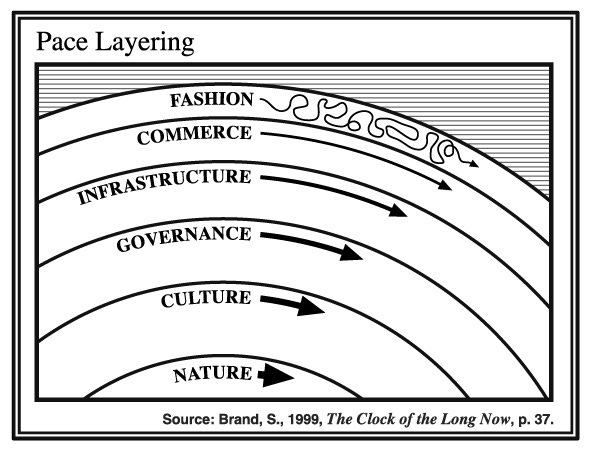

So what is present? What shifts quickly and what shifts slowly? Obviously, the pace of technological change is not a new idea. One visualization of this comes from Stewart Brand — a complex character in the tech space, but his 1999 visualization (maybe we can thank Brian Eno for it, too) of how time moves across systems is worth referencing.

At the bottom, the slowest to shift, is nature. Culture moves over this, slowly, and as it moves it drags the motion of governance. Governance, in turn, churns infrastructure. Brand and Eno presume infrastructure drags commerce, and that fashion, in turn, is chasing commerce. Of course, these are not all followers. Fashion’s speed can fuel commerce; commercial needs can fuel infrastructure, and infrastructure can lead to new ways to govern it, which in turn carries certain cultural affordances for how they’re used. And culture defines nature, but we won’t go there.

AI Fashion

AI hype operates at the speed of fashion, while AI itself can only move at the speed of infrastructure. AI hype zigs and zags applications of the same setups: using ChatGPT to do some novel thing, like writing a book or recipes, might get headlines. You’ll see this every time someone uses ChatGPT to write a book of poetry. You see this in new trends where people use diffusion models to create deep fakes or political propaganda.

These things have all relied on the same infrastructure, but were made dizzying by moving at the pace of fashion. The problems seem new, but are not: fake images have been with us, and the scale of their circulation is fueled by the infrastructure of social media. But when your eyes are darting from one third-party app, or one new form of abuse, to another and another, it can be overwhelming. That it is delivered to us using technologies designed for a deluge only amplifies this.

The point is not that these aren’t problems, or that novel uses don’t emerge. The point is that we mistake every new fashion trend for a new form of infrastructure, when they’re just new colors splashed on LLMs or diffusion models. That makes it exhilarating and exhausting, but it also overstates the pace of actual change.

You can use an LLM from 2020 and make it offer medical advice, but that doesn’t mean “AI can now give medical advice.” We are better off keeping our eye elsewhere, because fashion shifts at a dizzying rate, and its trends can collapse just as quickly. The point is: every new thing someone does with AI isn’t a new emergent capability, or a new infrastructure.

AI Commerce

Slower, and more concerning, is the speed of commerce in response to AI. There are two sides to this. The first is the companies that monetize their products and benefit from the hype cycles that come with each new model release. A new model is chiefly the same architecture with more data, though sometimes it is applied to novel uses. GPT-4 was more data than GPT-3. DALL-E 3 was more data plus a link to GPT-4. This is combinatorial: new products made by swirling together existing products, with improvements.

The other side of AI commerce is how industries outside of AI respond to the lead of tech companies. Some industries and companies will chase fashion trends. Others will slow down, moving into the infrastructure phase of development. In the US, commerce pushes infrastructure, with governance in response. That’s why it matters that we reverse this for AI.

Commerce behaves predictably. It aims to increase profit by minimizing costs, and much of that definition of cost is labor. There are no revelations that will come to us through looking too closely at the pace of commerce. I find things like the OpenAI board drama, or acquisitions, to be interesting details. But they aren’t where meaningful social input happens, or if so, only rarely.

Because I am interested in the ramifications of tech, I prefer to focus on governance and infrastructure. These do in fact move more slowly than fashion. But without infrastructure in place, AI companies won’t be able to build the things they want to build. This is a site of productive tension, and it is also where the rest of us start to have meaningful leverage. So this is where I aim my attention.

AI Infrastructure

AI infrastructure, in the absence of deliberate participation, is planned by AI companies but implemented by external industries. Infrastructure can and should be contested. Part of the infrastructure of AI is the set of ways it can be used by these external industries, and we have seen powerful examples of labor challenging the policy infrastructures and organizational infrastructures within the Hollywood system. We are seeing legal scaffolding arise through lawsuits.

Education is also infrastructure. When schools invest time into teaching kids AI, they are in fact building infrastructure for commerce and AI companies: an educated workforce. Paired with that education, we hope, we see a focus on the even slower paces of change: culture, which includes values and what we consider civic responsibility.

Unless we are designing AI ourselves, infrastructure and governance set the real pace of AI, and it does not move at the same speed for all people. Creating participatory systems at the infrastructure level is the most viable space for most of us to get involved: activism, calling legislators and/or protesting for data rights, striking, or even being the AI killjoy in your company, school or other workplace, are all ways of shaping what will eventually emerge as the infrastructure that AI companies will rely on.

Infrastructure can drive policy when it is developed by commerce, but sometimes, government drives infrastructure through funding and support — see the World Wide Web or the US National Highway System.

AI Governance

In responding to infrastructure, we prod governance and policy changes. AI is governed, in one sense, by the companies who build it. They develop content moderation policies and determine appropriate uses, dictate what to share and what to keep proprietary, what data they can use. That is governance at the commercial level, and yes, it moves faster than the rest of us. Democratic governance is inherently responsive, but we can and do create frameworks and blueprints that influence funding.

It may not always seem like it, but the US government is more powerful than AI companies. It can build infrastructures and decide how the public should benefit. Examples include the airwaves: some sections were sold, while others were parcelled off for public broadcasting and local news programming.

This is the spot where I look for what’s happening in AI spaces. Things here move slowly, but already, we’re seeing meaningful AI questions entering the legal system. The FTC is determining the extent of data rights protections for those of us who use social media: does Reddit have the right to sell our data for a technology that didn’t exist when we signed up?

This is why policy matters, even if it isn’t as fast as fashion. Keep trying to find the latest AI breakthrough or latest iteration of a well-known disaster, and you can lose sight of where you have real power.

AI Culture

Culture determines values, and who gets to be included in the processes of governance. It moves even more slowly than government. But it can also steer through its wake, because more powerful systems inherently move at a slower speed.

The culture of AI shapes the longest trends of all. This is how AI is approached by these companies, the “culture of AI,” which is addicted to datafication: categories, labels, reduction. In the culture of AI, we see the real goals of how it determines its own internal governance, and how it views infrastructure — chiefly as extractive, with services chiefly a mechanism for collecting and measuring. AI culture descends from a long history of technologies that aimed to replace labor and atomize knowledge into discrete segments. (I’ve written about this culture in depth).

But culture is much, much bigger than AI culture. It includes all kinds of ways to navigate technology, adopt and resist technology. The education sector is part of this, too, and can be a contributor to the humanities or to AI culture — or somewhere in between.

Too often, fashion and culture become confused. I think this is where the danger and distractions really fall. The permanently online “culture” is really fashion, but it’s a fashion that has become overwhelming as offline culture has become diminished. That’s a combination, I think, of a trend toward isolation and exhaustion fueled by the double whammy of the capitalist hamster wheel and a global pandemic.

Culture is big, but we lose sight of the fact that we are living within it. It’s the land rolling out in front of us that barely seems to move, but reaches beyond what our eyes can see. We need to learn how to see culture again, rather than to try to interpret fashion under its broader rules.

AI Nature

If we are looking solely at what might eventually end AI as we know it, nature is one such limit. There is only so much water to drink and heat that can be released. But there are also the limits of physics, which as far as I can tell do not change. New materials for superconductors might make things possible in computers that current systems cannot do. But nature will have the final say in how long AI survives, because it will have the final say over all of us.

One of the interesting twists of AI in this space is that many assert that AI itself can abolish the steadiness of natural time, by imagining a sentient AI ending all life on Earth as we know it before we do it to ourselves. This mistakes the rate of fashion for the pace of technological infrastructures. Each new tweak is fashioned as a step toward sentience, and simultaneously toward paradise and annihilation.

Conclusions

There are real, and very urgent, concerns about AI that we need to pay attention to. My concern for time scales is not meant to suggest leisure. It’s meant, rather, to say that much of the speed of AI is designed to disorient us from finding leverage and the footing from which we might assert it.

Infrastructure, once cultivated, can quickly calcify. But reacting to the buzz of social media travesties or incredible tech bro pronouncements isn’t what brings those risks under control. So I look here.

But part of this onslaught is a consistent pace of real harms, from discriminatory algorithms to job losses. These are real crimes and tragedies, and they are only ascribed to “fashion” because of the speed with which they happen. The question is a matter of how we perceive time in relationship to our ability to move through it. We cannot outrace the Shinkansen: not even our eyes can do that.

Of course, proximity shifts things, too. If an automated surveillance system is being deployed in your community, you have leverage to stop it through all of these other scales. Time moves differently for different communities, and solidarity matters. It can slow that time down, moving it from fashion to culture through the assertion of our own focus. Collectively, we create culture: and we slow things down together.

Bonus: What if the Moon Got Mad?

Last week before going to sleep I posted a silly Tweet, and then woke up to see it had gone viral — and picked up some angry responses from the types of people who think AI will become a superhuman intelligence and destroy the world. One of them said, “Way to double down on random claims instead of applying critical thought,” so I decided to explore what truly doubling down would look like.

It looked like making a song and music video. It was a good excuse to test out some new AI music software while I was at it. Here it is, with an exclusive bonus ending for newsletter subscribers.

Things I Am Doing In April

Dublin, Ireland April 2 & 3: Music Current Festival

Flowers Blooming Backward Into Noise, my 20-minute “Documanifesto” about bias and artist agency in AI image making, will be part of an upcoming event at the Music Current Festival in Dublin. The Dublin Sound Lab will present a collaborative reinterpretation of the original score for the film alongside the film being projected on screen. It’s part of a series of works with reinterpreted scores to be performed that evening: see the listing for details.

I’ll also be leading a workshop for musicians interested in the creative misuse of AI for music making, taking some of the same creative misuse approaches applied to imagery and adapting them to sound. (I’m on a panel too - busy days in Dublin!)

London, UK: All Month!

As part of my Flickr Foundation Residency I’ll be in the city of London for the majority of April, with a few events in the planning stages in a few UK cities. I will keep you posted when dates are final! But if you’re in London, Bristol, or Cambridge, keep a lookout. If you’re anywhere else (especially Scotland!) let me know if there’s anything I can do up there. :)

Sindelfingen, Germany April 19, 21: Gallerie Stadt Sindelfingen

For the “Decoding the Black Box” exhibition at Gallerie Stadt Sindelfingen, which is showing Flowers Blooming Backward Into Noise and Sarah Palin Forever, I’ll be giving an artist talk, “The Life of AI Images,” at 7pm on the 19th. On the 21st, tentatively, I’ll be part of a daylong workshop showing new work linked to “The Taming of Chance,” a project by Olsen presented alongside new works by Femke Herregraven, the !Mediengruppe Bitnik, Evan Roth & Eryk Salvaggio, who will be there in person.

Thank you again for your perspective on this.

It's a very interesting thing to contemplate all these differing speeds moving all at the same time, where we can possibly choose to step between bullet train and ambling at nature-speed by a cognitive change in perspective.