Norbert Wiener is often cited as the original tech-solutionist, and cybernetics is often cast as a prototype for the worst impulses behind today’s most extractive tech-company policies. But it’s helpful to recall that Wiener’s Cybernetics was written at a time when the idea of data was remarkably different. It’s also worth noting that Wiener was among the first to outline where we might want to draw boundaries between machines and human activities. It is arguable that his refusal to apply cybernetics to military projects is why cybernetic projects didn’t receive the kind of funding that the military would later grant to so-called “AI” projects.

One question Wiener considered was that of adaptive machines, specifically: who was doing the adapting? The question could not be solved once, he wrote, “but must be faced again and again”:

The growing state of the arts and sciences means that we cannot be content to assume the all-wisdom of any single epoch. This is perhaps most clearly true in social controls and the organization of the learning systems of politics.

— God & Golem Inc. (82) 1964

Cybernetics was chiefly interested not in the study of artificial minds or prostheses but in the study of feedback. Wiener suggests that social arrangements, political ideologies, and even individual preferences are not designed for homeostasis (that is, balancing systems toward the preservation of some existing state of affairs). They’re designed to change, so any machine built to preserve stability must itself be adapted to those changes, even though the systems themselves are “made with the intention of fixing permanently the concepts of a period now long past.” (83) He describes the “gadget worshipper” (53) as someone impatient with the unpredictability and uncontrollability of the human race, who seeks to replace it, as quickly as possible, with the more malleable and subservient machine.

Cybernetics famously emerged from a post-war craving for stability, but Wiener notes that cybernetics is “anti-rigidity” and emphasizes response instead. Here he raises the prospect of the extermination of all human life:

“If, for example, the danger is a remote but terminal one to the human race, involving extermination, only a very careful study of society will exhibit it as a danger until it is upon us. Dangerous contingencies of this sort do not bear a label on their face.”

I don’t know if Wiener would have been seduced by the longtermist gambit of mitigating unarticulated existential risks on a far-off horizon. There seems to be a reasonable degree of overlap between ideas like “the alignment problem” and Wiener’s thinking, save for one question that a science of feedback and communication must ask: is it possible to articulate human purpose, and human failings, from the perspective of one particular moment in time with its particular social consensus?

The Robots Will Kill Us

It seems like we keep talking about robots killing everyone: rogue AI systems convincing us to launch nuclear weapons at each other, or manipulating human actors into wittingly or unwittingly developing and executing global weapons systems.

The Center for AI Safety issued a very short statement last month, the entirety of which reads: “Mitigating the risk of extinction from A.I. should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

There are at least two camps on this: one believes this is a “long term extinction risk,” that is, further away and total; the other sees the risks of AI as less total but already immediate. One sees it as a problem that we can resolve through thought experiments and hypotheticals; the other sees the solution strictly in establishing careful feedback mechanisms.

The emphasis on existential risk, on “killing everyone,” makes today’s AI-assisted deaths and misery seem minor by comparison. The idea of AI killing everyone isn’t new, but its newest versions are better funded. They come from experts who believe these stories, and whom, for whatever reason, the public defers to. Where one gets expertise in global annihilation, though, is uncertain: as Wiener notes, there are no experts in atomic warfare because we have not experienced an atomic war.

I first heard about existential risks around 2015, at about the same time that a neural network beat Super Mario Brothers without any instructions in a special Super Mario Brothers Championship. Neural nets are useful when you don’t have a good model: they reveal possible patterns in large datasets that you might not be able to analyze yourself. Uncovering the pattern of button mashing was a pretty cool bit of engineering.

It came on the heels of Cleverbot, a chat app that learned from the conversations people had with it. It eventually earned high marks on the Turing Test, winning headlines like “Software tricks people into thinking it is human.” So that was the state of things in 2015, when we started seeing letters warning about the future of artificial intelligence from the likes of Stephen Hawking and Elon Musk. Those letters came from the same institute (which, full disclosure, I briefly worked for) that has now, again, written a letter warning about AI killing everybody.

At the same time, cities were integrating racially biased, automated risk-assessment systems to determine bail, and automated facial recognition programs that produced false accusations disproportionately against black men.

But the idea that computers could be biased was quite controversial, with many machine learning startups marketing data-centric ML systems for hiring decisions, for example, as if they would eliminate bias. The systems for bail and arrests were also touted as “unbiased,” but the appeal of “unbiased” policing systems comes into question when they continue to be used long after their biases have been proven.

Bias in AI was “controversial.” But what was quite obvious, it seemed, was that a technology whose state of the art was a program beating Super Mario World’s donut level and a chatbot roughly akin to the ones that bombard us with spam on social media sites today could someday destroy humanity.

Why is this belief so prevalent?

I subscribe to the view that it shifts conversations on regulation away from actionable policy arenas and into abstractions: guiding principles of “alignment,” and vague assurances that we must design systems that “remain in our control.” Of course this is true. But why not apply that principle directly to the systems we have today, rather than to hypothetical future machines built for a hypothetical society whose values, social systems, and politics we have no way to anticipate?

Sam Altman, CEO of OpenAI, did not sign the Future of Life Institute letter but recently signed the much shorter one. Yet in a since-scrubbed report from an event, Altman called “for regulation of future models, [but] he didn’t think existing models were dangerous and thought it would be a big mistake to regulate or ban them.” (Emphasis mine.)

The argument to focus on the future certainly defers discussions of today’s policy and regulation. But focusing on an abstract future version of the technology, as seen from current perspectives, also comes dangerously close to locking in a trajectory for politics and social order that ensures the stability of the current system. That would be great if the current system worked for everyone. But the current system does not work for everyone.

Locking in a rigid policy course serves two ends. First, it grounds immediate policy conversations in fuzzy, subjective language instead of focusing on clear harms to what we hold dear in the current moment. Second, it proposes that we, right now, are the ones so clear-headed and masterful as to decide what all future generations will need or prioritize. This seems desirable if current arrangements work for you and your offspring. But it is also a way of locking in power over those who lack it in the current system.

It is no coincidence that this language often implies that there are a limited number of trusted stewards who can safely develop AI, typically the large, well-funded tech companies of today.

Grift or Delusion?

Recently Miles Brundage, who works on policy at OpenAI, questioned the cynical reading of this link between existential risk and avoiding regulation. As he puts it: “How would regulating less help with the killing thing?”

I don’t think it is about regulating more or less, but about what is regulated and how. I also think it is more complex than pure cynicism. I don’t think anyone wakes up thinking about how to secure power over the oppressed. Ideology is more pernicious than that. It emerges from a variety of diffuse incentives.

Sure, communication teams might be deliberately invoking existential risk as a strategy to avoid discussing the deeper issues at stake for people on today’s planet. It’s their job to come up with frames that win the media discourse. But someone has to believe those stories, including many engineers and computer scientists who ought to know better. So how does one come to the conclusion that the highest priority for humankind is to stop the very technology one is working to build?

To understand that belief, it is helpful to view “AI will kill us all” more as a convenient, self-reinforcing delusion than a purely cynical misdirection. That delusion reinforces egos by elevating the importance of the work, and makes every decision messianic: more direct social harms end up diminished by contrast.

This is the power of convenient belief as a response to cognitive dissonance. Folks may not be consciously engineering an ideology (not that it matters), but they find it emerging from the ways they justify the compromises of their work. It is a comforting myth.

Obviously, AI is not a threat to human survival, or the folks writing these letters would just stop developing it and focus on limiting it, rather than writing letters about how they really ought to stop. It can’t be an immediate concern to them, at least not equal to the level of importance they are asking the rest of us to place on it.

Assume that neither pure evil nor pure stupidity is at play. Instead, it is helpful to see this as the slow self-seduction of the stories we tell ourselves about our choices.

When we show up to work, in any field, day after day, we can become deeply attuned to the history of our methods and the decisions that justified them. The real harms are understood as trade-offs: every decision we made was better than the next-worst possible decision.

That expertise starts to resemble an ideology: best practices, the defense of trade-offs framed as binary choices, ideas about whom you help or harm, and what the goals and priorities are all combine into a narrative, an ideological perspective that seeps into our thinking. You have to build the system a certain way because if you didn’t, it would be much worse. But the question of “do we build this system?” is answered on the day you start building it.

You Never Make the Worst Choice on Purpose

In this context, people always design systems whose outcomes are the best they could envision within realistic constraints. Any confrontation with negative impacts is still contextualized as the better choice over the choices you didn’t take.

It is a consensus that speaks to the very problem of predicting what is best for the future. Rooms of AI engineers, who have a particular background and frame for understanding current problems, also define the boundaries on what “current problems” are. There is a lot to be said for the diversity of perspectives in machine learning: getting more people from different backgrounds into the rooms where these decisions get made may be helpful in minimizing the harms to the communities they come from.

But it is also worth questioning the very idea of “the room” as the site where these decisions are made. In any environment, there is a risk that people stop seeing the system for what it does and instead see how it was designed, a cumulative story of past compromises and decisions. These stories build on a self-sustaining framework: a paradigm that isn’t reassessed unless catastrophe forces a reassessment.

Who, and what, defines a catastrophe? We see few open letters from tech CEOs about the devastation that a false arrest brings to a family, or what multiple false arrests bring to an entire community. On the other hand, workers in these companies were essential to steering IBM, Microsoft, and Amazon boardrooms away from face recognition technologies.

Real change requires actively soliciting and responding to feedback: a shift from models of deployment to models of involvement. When we are inside a problem, or a story, we can struggle to see its full context. Our expertise can form a boundary of focus that cuts out what lies beyond.

The extinction of the human race in the distant future also poses another flattering question: What happens if you weren’t there to make those decisions? What happens if your story ends — and others do it wrong? It’s interesting precisely because it is decontextualized from the daily decision-making grind. It elevates your role from careful steward of a larger system to that of one of the few protectors of humankind. It also elevates the system you know, the values you hold, over all other permutations. It suggests anxiety around the change that time — and new ideas — might bring.

Might the culture of safety engineering reward those who catastrophize harms and prioritize local and physical risks, those within the purview of the system boundary, over diffuse social health that may be several times removed from the systems they deploy? In any industry, the best engineers are meticulous worriers. They build successful careers because they are uniquely talented at worrying about failures, albeit typically about looming physical catastrophes. That culture makes sense for engineering safety mechanisms in a chemical plant.

In contrast, AI’s physical catastrophes are diffuse and remote. They don’t happen to the guy next to you on the factory floor. They happen on different continents, in different communities, on time scales wherein you may never even learn about them. If the lens of focus is on the immediate boundaries of the system, it is hopelessly constrained. The decisions of algorithms are simultaneously written into media, law, prisons, health care, education, the military, loans, job applications, careers, and who has them.

So the problem of these existential concerns — over the local, immediate concerns — is one of culture. The culture of the safety engineer, focused on abstract future catastrophes with unarticulated causes, is increasingly prioritized over the culture of the harmed, who exist on the peripheries of algorithmic impacts.

Caroline Sinders puts it this way:

“Who are regulators inviting to speak to them right now — and what are those talking points? E.g., the White House meeting with only industry, the fawning over Sam Altman. That might seem like one data point … but it’s big. Because internally that conversation is going to get referenced over and over again, and no amount of research or think pieces can sway someone’s mind once they’ve decided something. So how government treats these creators, and who they deem to be legit, and what are important talking points, matters.”

Should We Think About the Future?

I have been involved in futures thinking for years, integrating it into my consultancy and design practice, typically in tech and policy spaces. Futures thinking is powerful in the same way that any creativity is powerful. It takes us outside of the context we have built for our situations. It encourages distance from our own story, which gives us space to discover new ones.

Futures thinking is meant to disorient us from current ruts. Futures thinking cannot be a simple exercise in prediction and extrapolation of our own perspectives. A trajectory is obvious, but change has to be shaken loose. But sometimes, even the best futures thinking exercises can be pure escapism. More than futures thinking, AI needs a collective “but what about right now?” reset. We can track trajectories of real harms happening in the world as we speak, and project them forward.

Even better, we can work together to create a new imaginary of AI, one that isn’t oriented toward labor displacement and the extraction and exploitation of ill-gathered data. The future is obviously worth considering. But where do we draw the lines on the priorities of present-day suffering? If you belong to a population for whom AI is already being used to track and control your everyday movements, is that not already a dystopian reality? Such uses are possible without AI “slipping out of our control,” a scenario that isn’t yet remotely possible, despite the very familiar hype of “new” Turing-Test-beating chatbots and neural net marvels.

Wiener’s warning about the gadget worshippers is worth remembering:

“It is the desire to avoid the personal responsibility for a dangerous or disastrous decision by placing the responsibility elsewhere: on chance, on human superiors and their policies, which one cannot question, or on a mechanical device which one cannot fully understand but which has a presumed objectivity. It is this that leads shipwrecked castaways to draw lots to determine which of them shall first be eaten. It is this to which the late Mr. Eichmann entrusted his able defense. It is this that leads to the issue of some blank cartridges furnished to a firing squad. This will unquestionably be the manner in which the official who pushes the button in the next and last atomic war, whatever side he represents, will salve his conscience.” (54)

Let philosophers and artists daydream about the future. But the future cannot be the scapegoat for the present, and we can’t blame future catastrophe on the sentience of machines we may never build. Policy should prioritize today’s human suffering over future hypotheticals. It can do this by creating systems for feedback and adaptability, rather than policies that extend today’s thinking into eternity. These are decisions that must be faced now, and then again, and then again.

Stuff I Am Doing This Week

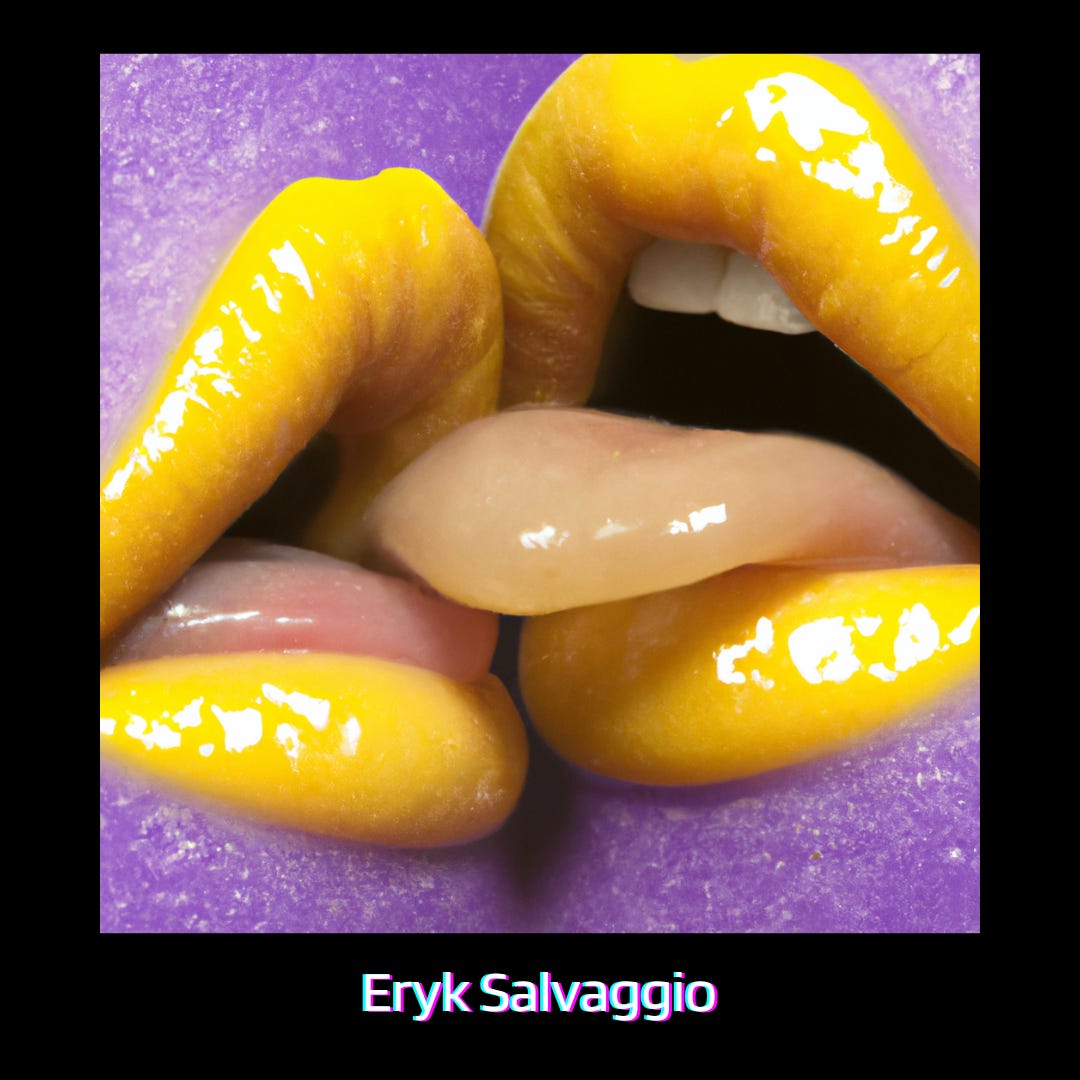

I have work in the upcoming DAHJ virtual exhibition, A Kind of Alchemy: The Work of Art in the Age of Artificial Intelligence, opening with an online VR or browser-based event on June 6. You can find a series of images, collectively titled Spurious Content and Visual Synonyms, which came out of efforts to test the limits of content moderation in AI image generation tools. The results are unsettling, with an uncanny, fetishistic quality that comes from using prompts that distract the system from what it is intended to be limiting. (There’s nothing explicit in the selected work.) It’s part of an ongoing series of images made by exploring the boundaries of these systems.

IMAGE Issue 37

How To Read an AI Image has been published by IMAGE, in a special issue of the journal that approaches synthetic images and text through a media studies lens. It’s a great issue with many other excellent pieces, and if you’re involved in any kind of ML research, or in visual / media / cultural studies, you’ll find something insightful.

NorthSpore Mushrooms Interview

I was interviewed by my friend Will Broussard for NorthSpore Mushrooms, talking about Worlding and mycological art-making! Check it out here.

Flowers Blooming Backwards Into Noise

Finally, I’d like to share a short “documanifesto” I made in lieu of an artist’s talk for The Forking Room in Seoul, for its exhibition, “Adrenalin Prompt.” The film discusses how diffusion models work, my concern with the categorizing logic of these systems, and how my work aims to respond to and undermine that logic. Hope you enjoy it!

Thanks for reading! Unsure of how steady updates will be over the summer, but I am still here. :)