Buried between the images of television broadcasts is the 14-billion-year-old echo of the universe popping open: the microwave radiation unleashed by the Big Bang. We hear it on the radio, and it’s rendered on TV as snow, or a battle of ants, or a sand storm, depending on where you live. The history of the universe is tucked between things, until technologies accidentally reveal them.

Refik Anadol’s AI artwork, Unsupervised, recently acquired by the Museum of Modern Art in New York, is a tall, smeary smorgasbord of pixel splash. AI as pure data visualization. Here, the work is a movement of pixels representing visual information: the spaces between artworks.

“Anadol trained a sophisticated machine-learning model to interpret the publicly available data of MoMA’s collection,” the curators write, explaining that as “the model ‘walks’ through its conception of this vast range of works, it reimagines the history of modern art and dreams about what might have been—and what might be to come.”

As was pointed out by Ben Davis in a quite sober assessment of the work, “one evolves endlessly through blobby, evocative shapes and miasmic, half-formed patterns. Sometimes an image or a part of an image briefly suggests a face or a landscape but quickly moves on, becoming something else, ceaselessly churning.” Davis calls the piece “an extremely intelligent lava lamp.”

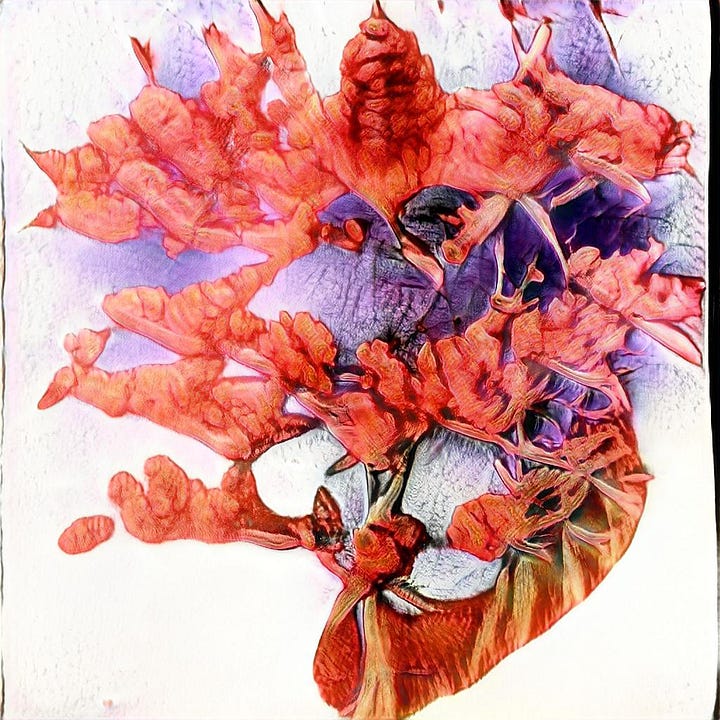

It looks like this:

The “walk” of the curators’ description, and the “blobby” miasma, describe the motion the AI creates as it transitions from one image to the next. This gloopy in-between state is something I’ve observed in GANs. When the model loses any sense of pattern, it might render shapes like bird’s nests made out of twisted clay ropes.

Here are four images of my own, from some experiments in 2019-2020. This is the output of a highly dis-correlated dataset: the images in the training data were incredibly diverse, though they tended toward flowers and images of dancers (reflections of the public domain material I was using in more direct digital collages at the time).

We can note a few things. One, they look painted, though there isn’t a lot of paint in the original dataset. Two, there is a tendency to clump and seek. That’s what I call the patterns of texture that stick together, connected by networks formed by a meandering lattice. Clumps are patterns of texture, seeking is what we see in the lines of the lattice.

To the extent that television has a signature noise rooted in the analog (the Big Bang in reruns), any AI’s noise is rooted in the digital. You might expect digital noise to look a certain way: like glitches in satellite television signals, stuttering as information comes in at fractions of a second, in scrambled order, reassembled as a series of starts and stops from whatever gets through first. But these glitches emerge from the entanglement of different transmission and display technologies. Glitched digital photographs are a result of missing and corrupted file information, often in compression systems.

AI-generated glitches come from other relationships. More often, AI images don’t glitch, they gloop. They streak and striate. It’s the result, I suspect, of how these systems seek out images from the fuzzy noise they start with. While noise is an end state of a bad television broadcast, it’s the start state of AI images.

They start out with an image of random noise and seek paths through adjacent pixels in the fuzz, a highly detailed game of connecting the dots. They correlate these patterns to a kind of visual dictionary of possible sequences. As those lines form the bare outline of a desired shape, the model fills in more detailed information. More iterations, more layers; more detail. This search for meaningful neighbors in the pixels forms a meandering line, like the hyphae of a mycelial network under soil, looking to move in directions guided by nutrients. Aside from emptiness, the line is the simplest pattern. Lines touching lines is next. The pixel streaks can be interpreted as a method of connection between loosely associated clusters of pixels, a response to the barest presence of information. When the streaks don’t find more complexity, they are drawn as if by a wandering pen. Blobs, meanwhile, are the opposite: a pileup of streaks, clumped like oil paints until textures emerge. These textures are refined and built up over the course of generating layers of detail.

Animate it by cycling through all of these “possible images” and it ends up looking… a lot like a lava lamp.

Interpolation

Latent Space is the space between generated images and any data points they were trained on. If you trained a model on 100,000 images of flowers, it would have the data of those 100,000 images. It would also generate images based on its understanding of what was “between” any two given images in the dataset, which is called interpolation.

Interpolation comes to us from the French, in 1610, where it was used to mean “altering or enlarging (a writing) by inserting new material,” suggesting a hint of falsification: a bit like forgery, but not quite. Interpolation in neural nets is often called “dreaming” or “imagining” new images in the language of the developers.

It’s better to think of the images as dots on a scatter plot: if there is a dot for 4 and a dot for 6, interpolation says, “OK, what about 5?” Consider the exponential growth of possible “in between spaces” when you have not two images or dimensions in your plot, but 100, or 4 billion. The vast space of this AI-generated in-between-ness is the “latent space,” latent in that it lies dormant in the model: connections unexplored until you go exploring.
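The scatter-plot intuition can be sketched in a few lines of Python. This is only a minimal illustration, assuming plain linear interpolation: the names `lerp` and `lerp_vector` and the toy two-number "latent vectors" are made up for the example. Real models work with vectors of hundreds of dimensions and may blend them differently.

```python
def lerp(a, b, t):
    """Return the point a fraction t of the way from a to b (t in [0, 1])."""
    return a + (b - a) * t


def lerp_vector(v0, v1, t):
    """Apply lerp coordinate by coordinate to two equal-length vectors."""
    if len(v0) != len(v1):
        raise ValueError("vectors must have the same length")
    return [lerp(a, b, t) for a, b in zip(v0, v1)]


# The dot for 4 and the dot for 6: halfway between them is 5.
print(lerp(4, 6, 0.5))  # 5.0

# Two hypothetical "latent vectors" standing in for two images.
flower = [0.0, 2.0]
dancer = [4.0, 0.0]
print(lerp_vector(flower, dancer, 0.25))  # [1.0, 1.5]
```

Every value of `t` between 0 and 1 names a different in-between point, which is why the number of possible in-betweens balloons as images and dimensions are added.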

A lot of AI art presents this latent space as videos, cycling through the possible variations of images in the dataset, called Latent Space Walks. Here’s a video of one, an assemblage of photographs of windows. None of the photographs of windows appear in this video, only their AI-generated counterparts.
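A walk of this kind can be sketched as a path that visits a handful of points and fills in evenly spaced steps between each pair. The sketch below only builds the path. The step that turns each point into a picture (the trained generator) is assumed and not shown, and `latent_walk`, `waypoints`, and `steps_between` are hypothetical names.

```python
def latent_walk(waypoints, steps_between):
    """Yield points along straight lines joining consecutive waypoints.

    waypoints: list of equal-length lists of floats (stand-ins for latent vectors).
    steps_between: number of in-between points inserted per pair.
    """
    for start, end in zip(waypoints, waypoints[1:]):
        # t runs over 0, 1/(n+1), ..., n/(n+1); the end point is emitted
        # as the start of the next segment, so nothing is duplicated.
        for i in range(steps_between + 1):
            t = i / (steps_between + 1)
            yield [a + (b - a) * t for a, b in zip(start, end)]
    if waypoints:
        yield list(waypoints[-1])  # finish on the final waypoint


path = list(latent_walk([[0.0, 0.0], [1.0, 0.0], [1.0, 2.0]], steps_between=3))
print(len(path))  # 9 points: 3 waypoints plus 3 in-betweens for each of 2 gaps
print(path[2])    # [0.5, 0.0], halfway along the first segment
```

Feeding each point on the path to a generator, one per video frame, would give the slow churn these videos are known for.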

It is safe to translate some of the hype-speak, then, to say that Refik Anadol’s work is not so much “reimagining the history of modern art and dreaming about what might have been” as interpolating that history. It is inserting in-between points, presenting latent (i.e., possible) images that “exist” in the space between any two or three or four or four hundred pieces in MoMA’s collection. It renders these works as video, so it is also “rendering” the space between its current state and wherever it needs to go next. As it changes pixels from whatever is on screen to whatever is next in the latent space, the pixels seek common neighbors once again.

The effect of interpolation on video is the illusion of movement, like waves crashing against the shore or a pulsing mushroom brain. I’ve been playing with interpolation lately, as it’s a tool that RunwayML has in its video suite. Interpolation can be quite useful in smoothing out missing frames, by looking at one image in a video, comparing it to the next, and “averaging them out” to fill in the space between them. This allows for incredibly detailed slow motion, for example, though significant portions of that detail are “imaginary,” that is, they’re interpolated — assumed to be there based on the presence of what’s beside them.
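The “averaging them out” step can be sketched as a simple cross-fade between two frames. This is a deliberate simplification and not a description of how RunwayML's tool works internally. Frame interpolation tools often estimate motion rather than blending pixels in place. The frames here are tiny grayscale grids written as nested lists, and `blend_frames` and `inbetween_frames` are hypothetical helper names.

```python
def blend_frames(frame_a, frame_b, t):
    """Weighted average of two same-sized grayscale frames (nested lists)."""
    return [
        [(1 - t) * pa + t * pb for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(frame_a, frame_b)
    ]


def inbetween_frames(frame_a, frame_b, count):
    """Return `count` evenly spaced blended frames strictly between a and b."""
    return [
        blend_frames(frame_a, frame_b, i / (count + 1))
        for i in range(1, count + 1)
    ]


dark = [[0, 0], [0, 0]]
light = [[100, 100], [100, 200]]

middle = inbetween_frames(dark, light, 1)[0]
print(middle)  # [[50.0, 50.0], [50.0, 100.0]]
```

None of the values in `middle` were ever recorded. They are assumed from what sits on either side, which is the sense in which the slow-motion detail is “imaginary.”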

Anadol does other stuff with this: the animation pace, response, colors, etc. are said to be affected by activity around the piece. I don’t have much critical to say about the piece, as I haven’t seen it installed at MoMA. If any of my response reads as criticism, take note that I’m just explaining how it works - if that comes off as criticism, then there’s something very weird going on with the AI art world.

Interpellation

My interest in interpolation is poetic. I’m working on a new piece of video art exploring the idea of interpolation as a representation of desired kinships: closing gaps in connections by finding likenesses between neighbors. Reflecting on the mycelial metaphor, I’m exploring the interpolated frame as a symbiont: a new synthesis of two disconnected entities, meeting and exchanging information. In biomes, some mushrooms meet plants and “close the gaps” through the entanglements of hyphae and root systems. This entanglement extends outward to trees, and beyond.

By rendering mushrooms, plants and trees in an endless exchange of pixel information, animated through this latent-space walk, we can craft an imagery of kinship through interpolation. They appear to be in motion. Instead, we’re seeing the latent space — the space between them — being closed, as the data for “mushroom image” and “flower image” and “tree image” seek out ways to find one another, extending the images of these bodies toward the other.

I’m sharing a short music-video version of this project here, over a new track from an upcoming Organizing Committee record. The actual version is still a work in progress. But I wanted to share it as a reference.

Taken literally, nothing in this film is actually moving. Like all films, it’s a sequence of images. Unlike a film, each frame is interpolated (using a RunwayML AI video tool) by redrawing the original, generated images as a predicted state in between one and the other.

The illusion of motion is created by tightening the visual distance between isolated mushrooms, flowers and trees. That gap closes as pixels move closer to one another, and in our eye we see the action of moving-toward and merging-with.

Thanks to the incredible slow motion possibilities of digital interpolation, a 3-second video of a woman can be extended to three minutes. She began as a series of 90 images rendered by MidJourney as incredibly narrow variations of the same prompt, constrained to produce nearly identical portraits. Watch closely and you’ll see transformations. Her hair changes, her eyelines deepen. The result is a woman perceived on the slower time scale of plants and mushrooms, while the mushrooms and plants appear to move at the speed of human activity.
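A rough back-of-envelope calculation shows how much of that stretched video is interpolated. The 90 images, 3 seconds, and three minutes come from the text above. The assumption that the output keeps the frame rate the source implies (90 images over 3 seconds) is mine, and the real export settings may differ.

```python
source_frames = 90        # images generated (from the text)
source_seconds = 3        # original duration (from the text)
target_seconds = 3 * 60   # stretched duration (from the text)

# Assumption: playback rate stays what the source implies, 90 / 3 = 30 fps.
fps = source_frames / source_seconds
target_frames = int(fps * target_seconds)

# Every frame that was not one of the 90 originals has to be interpolated.
interpolated = target_frames - source_frames
share = interpolated / target_frames

print(fps)                    # 30.0
print(target_frames)          # 5400
print(interpolated)           # 5310
print(round(share * 100, 1))  # 98.3
```

Under that assumption, roughly 98 percent of what is on screen sits between the generated portraits rather than among them.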

She and the flora do not connect. All of the mushrooms, flowers and trees are generated with a prompt requesting isolated scientific specimens: living things transformed into data. Disconnected from the network, they are animated as objects reaching for neighbors, seeking kinships, connecting and parting.

The gaze of this woman is meant to suggest the interpellation of the viewer: it’s hard to look away, we feel hailed into the world of the image as it slowly changes from photorealistic to painterly, animated to still. Her gaze lures us in, and we connect to it, the network of kinship occurring in the space between.

The in-between is where the AI does its work. It is filling in new spaces between data points, drawing new frames between disconnected images. So we might better read Anadol’s work as being “about” the in-betweenness of art at MoMA, presented as permutations of a rich data visualization.

My work here is about the in-betweenness of living systems. Focus on the “arrival points” of AI art, as an observer or practitioner of generated artworks, and you would miss this unique property of the medium. The optimistic reading of AI images is that they represent possibility and seek to reveal the transformative potential of these adjacent images. Find one data point in the cracks between two and you might find new ways and shapes of being.

These streaks and striations can be read as a glitch. Or they can be the story, one that AI-generated films and images can tell particularly well. But I also have to agree with Ben Davis’ critique of AI art as a “willful misreading of dystopia.” Anadol’s work, at a distance, reminds me of the celebration of engines and sports cars: technology presented as art form, in and of itself.

That’s a conceptualization I see in a lot of AI art, where the data visualization is seen as the end point. I don’t want to contrast my work with anyone’s, and there are lots of ways to make AI art. I just know I am less interested in working that way, and am trying to think through generated images themselves as possessing a kind of data-aura. The rendered images were isolated as specimens, as data points, and reflect a mushroom in the same sense that a mushroom, stripped from its network and placed into a filing cabinet, is a mushroom. Which is to say: it is not a mushroom, or a tree, or a flower, but data. It is unnaturally rendered. In the end I hope for this work to be about the longing for worlding, rather than suggesting the AI is a world-maker in any sense beyond the world constrained by its dataset.

In the meantime, the tension between the rendered and rendered-from is interesting to explore.

Things I’m Doing (Soon)

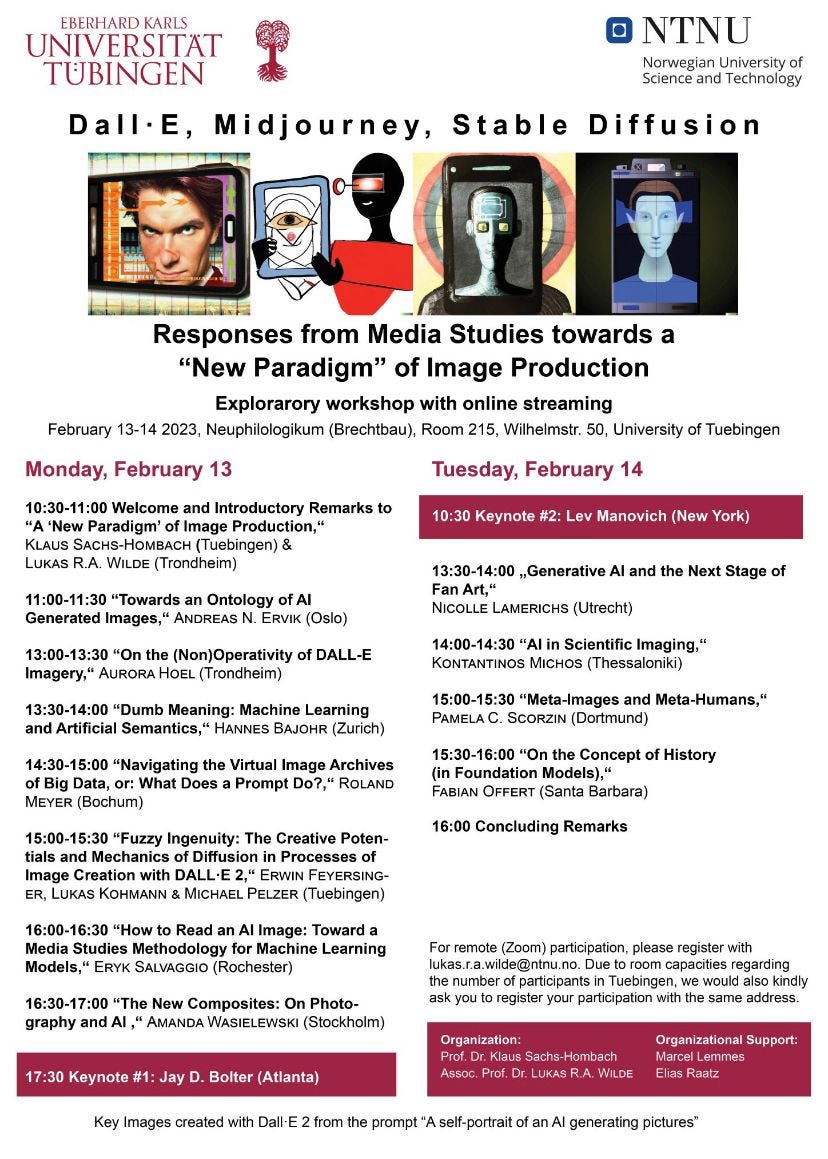

Excited to be presenting “How to Read an AI Image” on February 13 for this excellent 2-day workshop exploring media studies and ontolography (a word I doubt anybody is going to say). You can register to stream over Zoom by emailing lukas.r.a.wilde at ntnu.no. Not sure if there will be recordings; I’ll let you know.
